Greptile -- AI Pull Request Reviews in 2025

Code reviews are not every developer’s favorite part of software development, and AI-powered pull request tools offer a balm for that pain and toil. A lot of PR review time goes to catching typos, obvious bugs, and style issues, and other parts of development get rushed or fall behind because everyone’s review-fatigued.

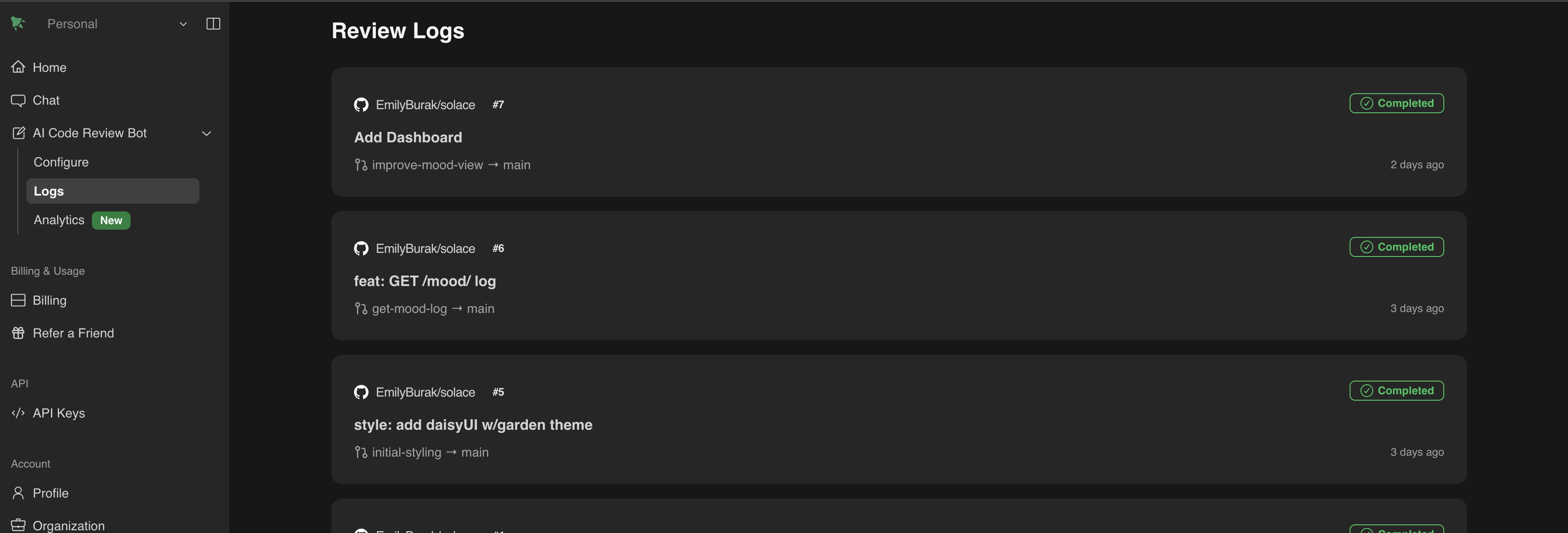

I’ve been skeptical of, but interested in, AI PR tools. How good could they be, and how much could they fulfill what I see as a promise of AI: freeing devs up for higher-level thinking instead of drudge work? I decided to test one (Greptile) on a personal project: Solace, which uses a Golang backend serving up an HTMX frontend.

I used three tools in the process: Greptile, GitHub Copilot, and Cursor. Each promised something a bit different from the others, and Cursor was used for parts of the actual coding, so at points AI was reviewing AI-written code. In this post I’m going to focus on Greptile, because it really deserves a look, and the other two tools are getting wider adoption and name recognition anyway.

GitHub Copilot’s PR review has just gone GA, while Greptile is a more mature project that I’ve been tinkering with for a bit. Greptile also includes chatting with codebases, which was my major use case to begin with.

Solace was well suited for testing because it went through several kinds of changes while I was testing: framework migrations, database integrations, form implementations, and API refactoring, for example. AI tools need to handle a variety of scenarios to be useful.

Let’s start with Greptile. It really surprised me with how much context it could glean, including from the PR description: the better the description, the better the result, just like with a human reviewer!

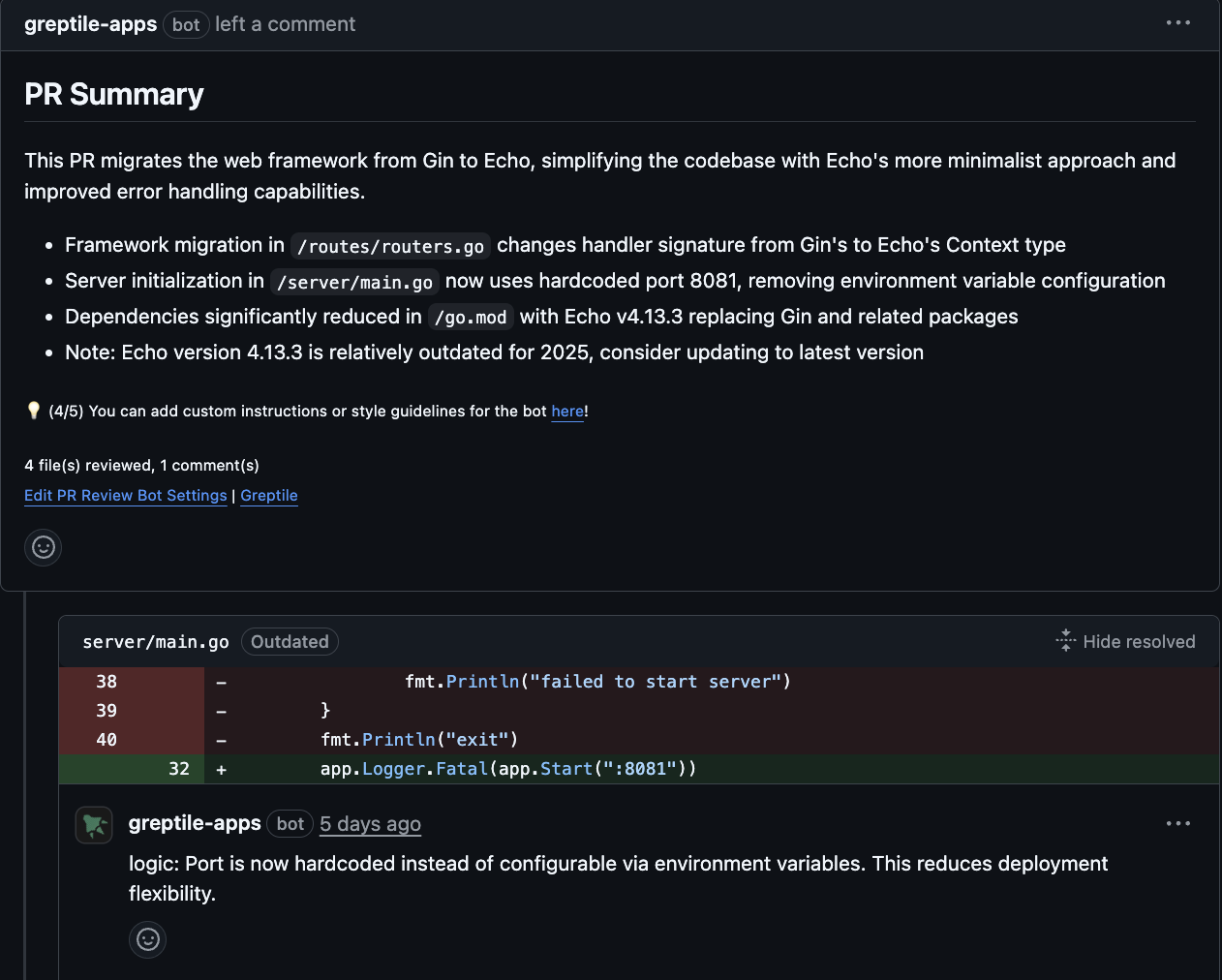

My first test was migrating Solace from Gin, a Golang web framework, to another framework called Echo. Greptile flagged an outdated Echo issue and, more importantly, a hardcoded port. The tool specifically noted: “Port is now hardcoded instead of configurable via environment variables. This reduces deployment flexibility.” That’s a real issue that could’ve been annoying later.
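To make the hardcoded-port comment concrete, here is a minimal sketch of the usual fix in Go. It is not Solace’s actual code: the `PORT` variable name, the `8080` fallback, and the `listenAddr` helper are all assumptions chosen for illustration.

```go
package main

import (
	"fmt"
	"os"
)

// defaultPort is a hypothetical fallback for local development.
const defaultPort = "8080"

// listenAddr builds the listen address from the PORT environment variable
// (an assumed name), falling back to defaultPort when it is unset or empty.
// The lookup function is passed in so the logic can be tested without
// touching the real environment.
func listenAddr(getenv func(string) string) string {
	port := getenv("PORT")
	if port == "" {
		port = defaultPort
	}
	return ":" + port
}

func main() {
	addr := listenAddr(os.Getenv)
	fmt.Println("listening on", addr)
	// With Echo, this address would then be passed to e.Start(addr)
	// instead of a string literal.
}
```

Passing the lookup function in, rather than calling `os.Getenv` directly inside the helper, is just a design choice that keeps the fallback logic easy to check.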

While I was implementing a landing page, Greptile caught a few more issues: typos (‘data’ instead of ‘date’) and more complex problems such as forms missing attributes, along with why that would be a problem: “Form missing method and action attributes for fallback without JS”. It also suggested the specific fix. Greptile seemed forward-looking, and able to ‘reason’ about accessibility.
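For readers who haven’t hit this with HTMX before, here is a rough sketch of what a form with a no-JS fallback can look like when rendered from Go. The `/entries` route, the field names, and the use of `html/template` are assumptions for illustration, not what Solace actually does.

```go
package main

import (
	"html/template"
	"os"
	"strings"
)

// formTmpl is a hypothetical form. method and action give browsers without
// JavaScript a working submit path; hx-post and hx-target let HTMX take over
// the same endpoint when it is loaded.
var formTmpl = template.Must(template.New("form").Parse(`<form method="post" action="{{.Action}}" hx-post="{{.Action}}" hx-target="#result">
  <input type="date" name="date" required>
  <button type="submit">Save</button>
</form>
<div id="result"></div>
`))

// formData carries the endpoint the form submits to.
type formData struct{ Action string }

// renderForm executes the template for the given endpoint and returns the HTML.
func renderForm(action string) (string, error) {
	var sb strings.Builder
	if err := formTmpl.Execute(&sb, formData{Action: action}); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	html, err := renderForm("/entries") // hypothetical route
	if err != nil {
		panic(err)
	}
	os.Stdout.WriteString(html)
}
```

The point is that the plain `method` and `action` attributes sit next to the `hx-*` ones, so the form still submits if the script never loads.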

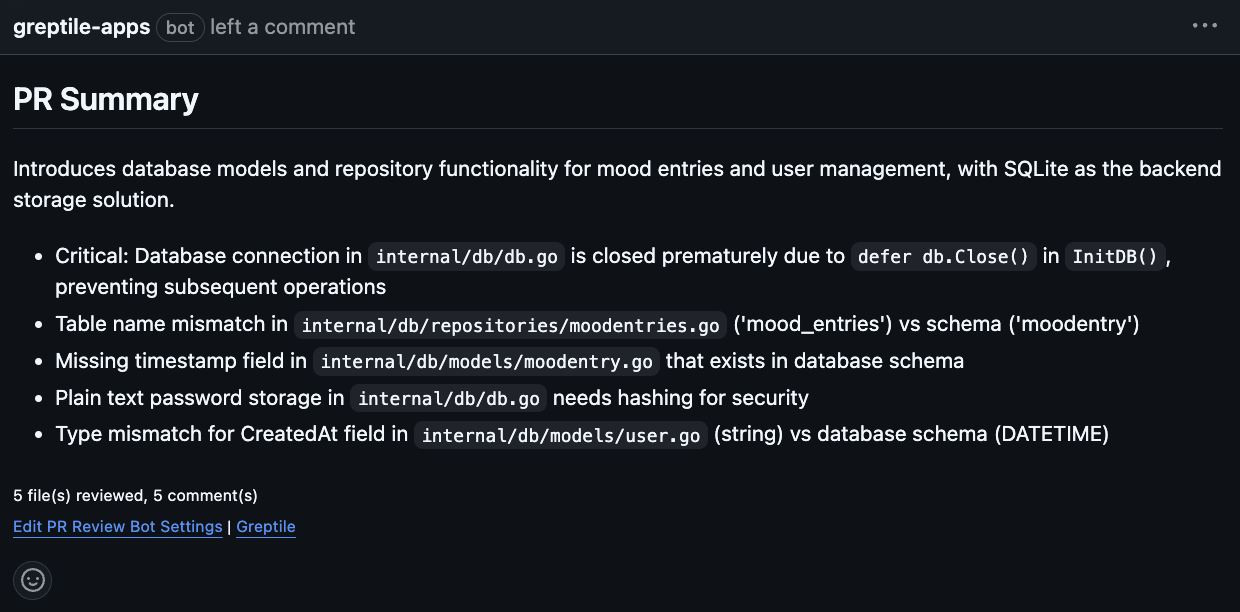

During a database integration PR, Greptile showed off. It identified several critical issues that could’ve caused production trouble: a database connection being closed prematurely, table name mismatches, and more. I haven’t fixed them all yet, as Solace is a very new project, but I’ve logged the remaining ones as bugs, and they might not have been caught until much later if not for Greptile. These are often small, one-line issues, the kind that get lost in manual code review.
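One common way a connection ends up closed too early in Go is a `defer` placed in the function that opens and returns the handle. The sketch below shows that pattern and a fix; it is not necessarily the bug Greptile found in Solace, and the `store` type is a hypothetical stand-in for a real handle such as `*sql.DB` so the example runs without a database driver.

```go
package main

import (
	"errors"
	"fmt"
)

// store is a hypothetical stand-in for a database handle.
type store struct{ closed bool }

// Close marks the handle as closed.
func (s *store) Close() error {
	s.closed = true
	return nil
}

// Ping fails once the handle has been closed.
func (s *store) Ping() error {
	if s.closed {
		return errors.New("store is closed")
	}
	return nil
}

// openBuggy returns a handle that is already closed: the deferred Close
// runs as soon as openBuggy returns, before the caller can use it.
func openBuggy() *store {
	s := &store{}
	defer s.Close()
	return s
}

// openFixed returns the handle and leaves closing it to the caller.
func openFixed() *store {
	return &store{}
}

func main() {
	fmt.Println("buggy:", openBuggy().Ping())

	s := openFixed()
	defer s.Close() // the caller owns the handle's lifetime
	fmt.Println("fixed:", s.Ping())
}
```

It really is a one-line difference, which is why it is easy to miss in a manual review.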

Greptile’s got some good features, the standout being configuration flexibility through a greptile.json file. While I used the default settings, the parameters look impressive: labels to trigger reviews on specific PRs, commentTypes to specify what kinds of comments Greptile should make (logic, syntax, style, info, advice, checks, and notes), and most importantly, instructions for custom guidance. That last one is big, letting a user provide natural language instructions that can talk about specific files, specific project rules, or provide more context.
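As a rough idea of how those three parameters might fit together, here is a sketch that embeds a hypothetical greptile.json and sanity-checks it in Go. Only the key names (`labels`, `commentTypes`, `instructions`) and the seven comment types come from the description above; the value shapes (string arrays and a single string), the label name, the directory path, and the instruction text are assumptions, so check Greptile’s own documentation before copying any of it.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// exampleConfig is a hypothetical greptile.json; every value is illustrative.
const exampleConfig = `{
  "labels": ["needs-ai-review"],
  "commentTypes": ["logic", "syntax", "style"],
  "instructions": "Handlers live in internal/handlers. Flag SQL built by string concatenation."
}`

// reviewConfig mirrors the three parameters discussed above.
// The field types are assumptions about the file's shape.
type reviewConfig struct {
	Labels       []string `json:"labels"`
	CommentTypes []string `json:"commentTypes"`
	Instructions string   `json:"instructions"`
}

// knownCommentTypes lists the comment types named in this post.
var knownCommentTypes = map[string]bool{
	"logic": true, "syntax": true, "style": true, "info": true,
	"advice": true, "checks": true, "notes": true,
}

// parseConfig decodes the JSON and rejects comment types not listed above.
func parseConfig(raw string) (reviewConfig, error) {
	var cfg reviewConfig
	if err := json.Unmarshal([]byte(raw), &cfg); err != nil {
		return cfg, fmt.Errorf("parse config: %w", err)
	}
	for _, t := range cfg.CommentTypes {
		if !knownCommentTypes[t] {
			return cfg, fmt.Errorf("unknown comment type %q", t)
		}
	}
	return cfg, nil
}

func main() {
	cfg, err := parseConfig(exampleConfig)
	if err != nil {
		fmt.Println("invalid config:", err)
		return
	}
	fmt.Printf("%d label(s), %d comment type(s)\n", len(cfg.Labels), len(cfg.CommentTypes))
}
```

A check like this could run in CI to catch a mistyped comment type before it reaches the repository, though that is a suggestion here rather than anything Greptile requires.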

The reality is that these tools excel at certain kinds of issues. Greptile calls them ‘tactical’ issues: bugs, obvious mistakes, redundant code, and unsafe patterns. Basic logic errors and lapses in security practices such as sanitization are caught well. But humans reviewing code bring strategic insight to the table: domain knowledge, architectural feedback, and long-term thinking. AI can flag a typo, but it can’t easily weigh decisions against business context or trade-offs between priorities or systems.

Here’s what I learned from actually using them: check your expectations. These tools work very well tactically, but they aren’t strategic the way human review is. Use them for what AI is often best at, catching the boring stuff, so we can focus on higher-level understanding and tasks. Definitely play around with the configuration; that’s my next step in working with Greptile.

For me it’s not really about saving time, as it is with other AI use cases. AI can apply its ‘thinking’ to every PR ‘equally’ (yeah, they’re just next-word predictors with random elements, but you catch what I mean), whereas a human reviewer might be tired, over-eager or stressed to ship, or burnt out. These tools are also probably a nice way to learn small practices you’ve missed.

AI PR tools such as Greptile can add a lot to developer workflows. They’re not perfect, and definitely not on the level of a good human reviewer, but they do what they do well. For Solace, my workflow is to PR early, let Greptile handle the tactical side, and focus human review time on bigger-picture and business logic issues. It’s worth integrating these tools into your development workflow gradually, but I think they can add real value and save toil and pain.

And that’s what we’re all looking for, right? A slightly less painful process and a bit less drudgery.